Aligning the Prokudin-Gorskii photo collection

Ansh Chaurasia

CS 180 Project 1

Project Overview

Sergei Mikhailovich Prokudin-Gorskii was a Russian photographer who pioneered color photography in the early 20th century. He took thousands of color pictures, each a series of three exposures of a scene onto a glass plate using red, green, and blue filters.

Each set of plates has been digitized as a single image containing the three exposures stacked vertically, with the blue plate on top, the green plate in the middle, and the red plate on the bottom.

The goal of this project is to take the digitized versions of those glass plates and to employ image processing techniques to align the plates (via translations/displacement) to produce a full color photograph with as few artifacts as possible.
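As a rough illustration of the layout described above, here is a minimal sketch of splitting a digitized plate into its three channels. It assumes the three exposures occupy equal thirds of the image height; the function name `split_plate` and the synthetic array are illustrative only and are not taken from the project code.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked scan into (blue, green, red) channels.

    Assumption: the three exposures each take exactly one third of the height.
    """
    height = plate.shape[0] // 3          # leftover rows at the bottom are dropped
    blue = plate[:height]                 # top third
    green = plate[height:2 * height]      # middle third
    red = plate[2 * height:3 * height]    # bottom third
    return blue, green, red

# Tiny synthetic "plate": 9 rows x 4 columns, so each channel gets 3 rows.
plate = np.arange(9 * 4, dtype=np.float64).reshape(9, 4)
blue, green, red = split_plate(plate)
```

The red and green channels are then each aligned to the blue channel before stacking them into a color image.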

The Images to Test

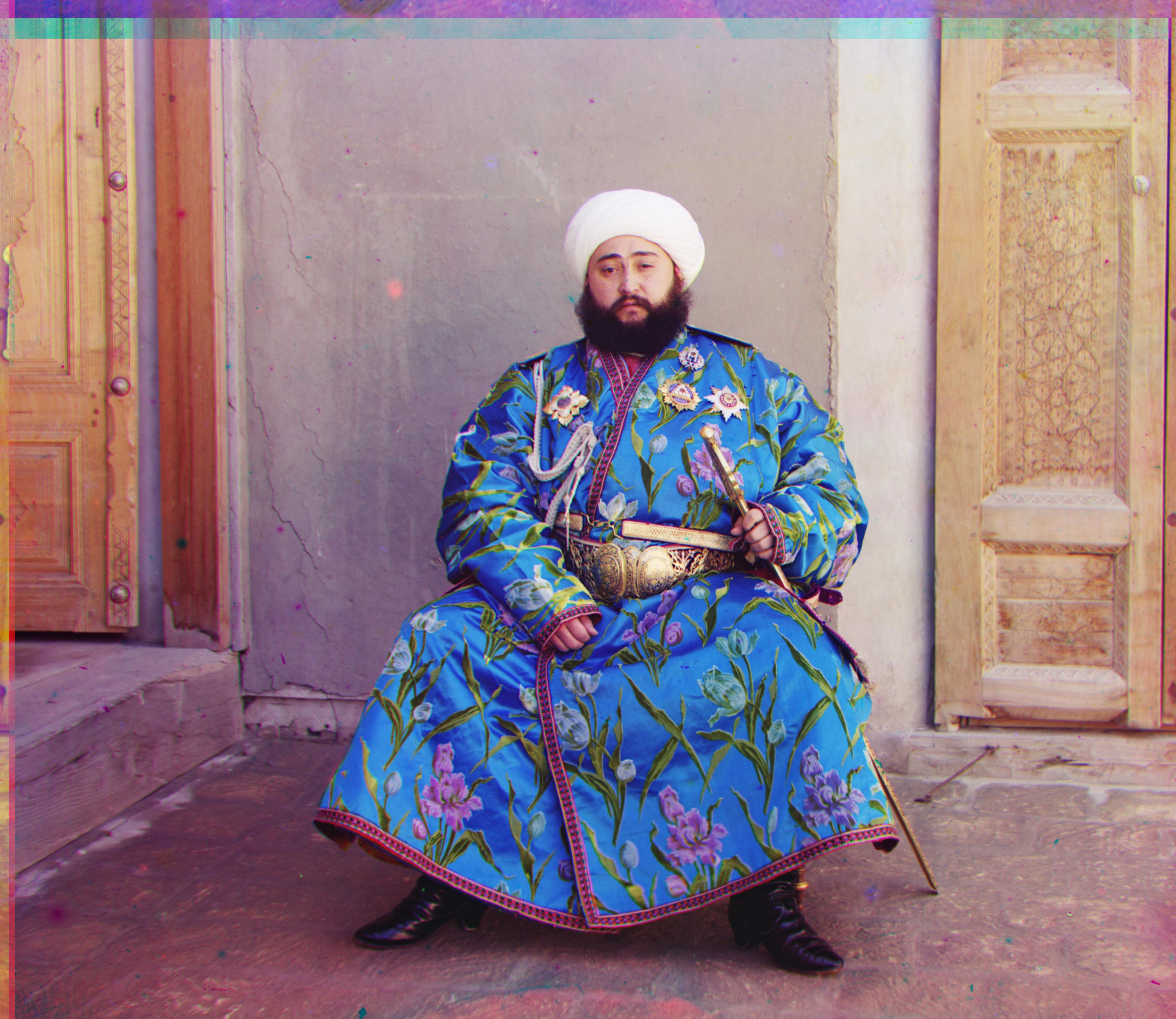

Emir, Church, Lady, Melons, Tobolsk

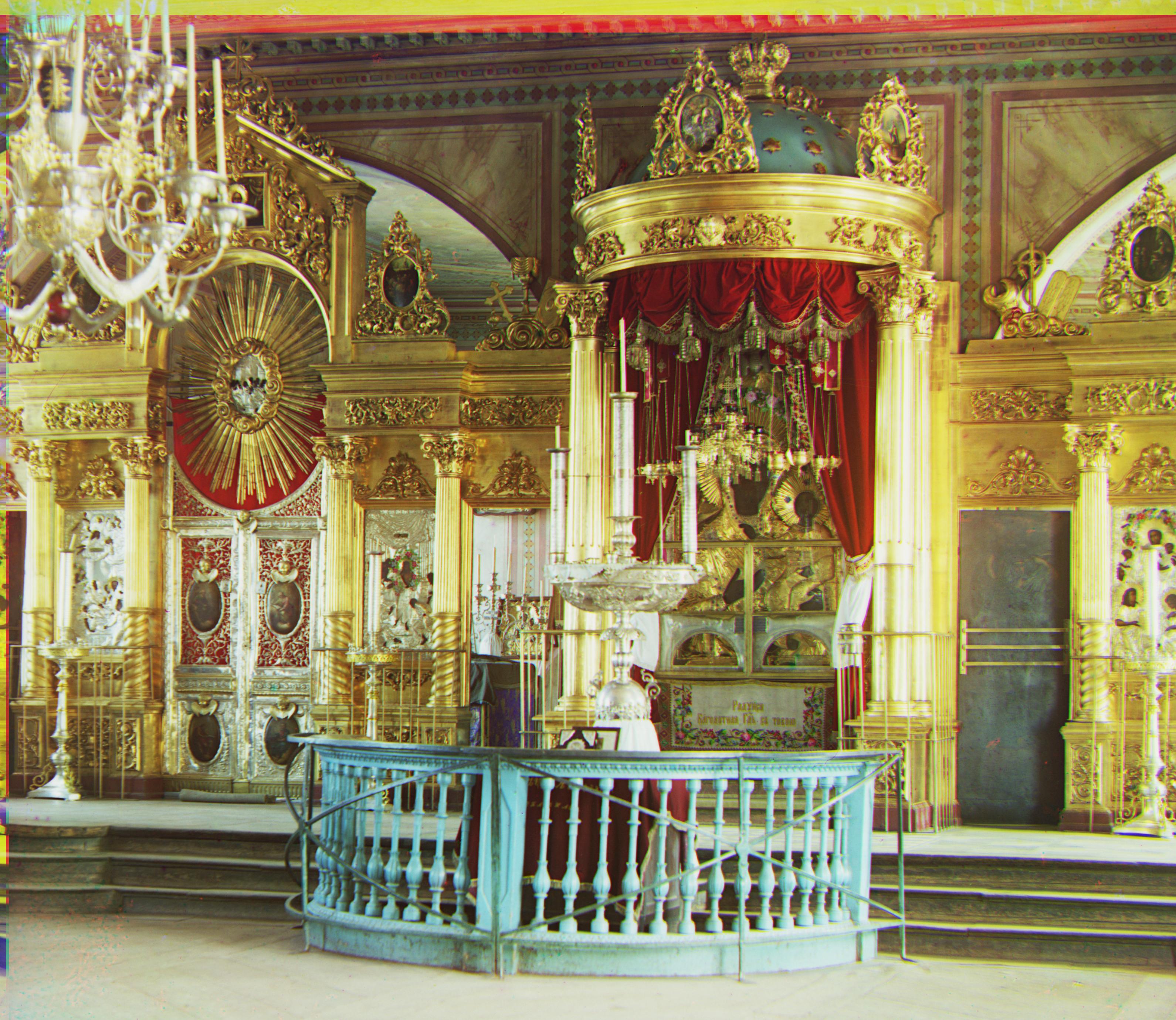

Icon, Sculpture, Three Generations, Train, Self Portrait, Onion Church, Monastery, Harvesters, Cathedral

Approach

We started out with a simple algorithm: try every displacement in a small window of [-15, 15] pixels, and pick the one that minimizes the L2 (Euclidean) distance between the two channels. However, we quickly found that L2 was too "absorbed" in the raw pixel values, i.e. not resilient to differences in brightness, which led to worse offset selections for alignment. For the sake of clarity, by offset we mean an x/y vector (deltaX, deltaY) by which we shift the image being aligned (im1) so that it lines up with the target image (referred to as im2 in our align algorithm).
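The exhaustive search described above can be sketched roughly as follows. This is a minimal illustration, not the project's code: the names `l2_distance` and `align_single_scale` are hypothetical, and `np.roll` is used for shifting, which wraps pixels around the image edge.

```python
import numpy as np

def l2_distance(a, b):
    """Euclidean distance between two equally sized images."""
    return float(np.sqrt(np.sum((a - b) ** 2)))

def align_single_scale(im1, im2, max_shift=15):
    """Return the (deltaX, deltaY) shift of im1 that minimizes L2 distance to im2."""
    best_offset, best_score = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Assumption: circular shift; pixels leaving one edge re-enter on the other.
            shifted = np.roll(im1, (dy, dx), axis=(0, 1))
            score = l2_distance(shifted, im2)
            if score < best_score:
                best_score, best_offset = score, (dx, dy)
    return best_offset

# Synthetic check: the target is the source moved 3 rows down and 2 columns left.
rng = np.random.default_rng(0)
source = rng.random((40, 40))
target = np.roll(source, (3, -2), axis=(0, 1))
found = align_single_scale(source, target, max_shift=5)
```

The cost grows with the square of the window size, which is why this approach becomes slow once the true displacement is large.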

The first change we made was to swap the L2 distance out for normalized cross-correlation (NCC). This improved the output, but some images were still extremely misaligned. Notably, the majority of the work then shifted to debugging the 'emir' image using the pyramid algorithm; there, the red channel was very evidently misaligned. Chasing alignment down the alley, we applied advice from other students on Ed: a manual crop of ~15% removes some of the excess colored borders that pop up on the sides. This did improve alignment, but the tunnel-ending light was still very far away.
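To make the two ideas in this paragraph concrete, here is a small sketch of a border crop followed by NCC. It is written under assumptions: the mean is subtracted before normalizing (a common variant; the write-up does not say which form was used), and the helper names `crop_border` and `ncc` are illustrative.

```python
import numpy as np

def crop_border(im, frac=0.15):
    """Remove a fraction of the image from every side before scoring."""
    h, w = im.shape
    dh, dw = round(h * frac), round(w * frac)
    return im[dh:h - dh, dw:w - dw]

def ncc(a, b):
    """Normalized cross-correlation; mean subtraction is an assumption here."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

rng = np.random.default_rng(0)
image = rng.random((100, 100))
brighter = 2.0 * image + 0.3            # same content, different gain and offset

cropped = crop_border(image)            # 15% removed from each side
score_same_content = ncc(image, brighter)
l2_same_content = float(np.linalg.norm(image - brighter))
```

Here the NCC score stays at essentially 1 despite the brightness change, while the L2 distance between the same two images is large, which is the resilience the switch was after.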

We also noticed that the run took quite a bit of time. While the recommended approach was to create a Gaussian image pyramid, we believed that this idea was for suckas. So instead, we progressively sampled patches of some size R and aligned on those until the score was only marginally increasing, i.e. if the difference between successive score increases fell under a value eps, we would quit displacement testing. This did provide a ~1.5x speedup, but we weren't really clawing at the root of the issue, which was the massive displacement window.

Swallowing our egos, we admitted that we needed to slash the displacement window, and the clearest way highlighted by Ed users seemed to be ... a pyramid search. With the pyramid search in place, we saw an improvement in alignment as well as a SIGNIFICANT speedup: a program that used to take minutes now executes in under thirty seconds.

Picture of the Overall Algorithm

function align(im1, im2, D, min_resolution):
    best_offset ← (0, 0)
    best_score ← -∞
    total_pixels ← width(im1) * height(im1)
    gaussian_pyramid ← create_gaussian_pyramid(im1, im2, min_resolution)
    if gaussian_pyramid is empty:
        gaussian_pyramid ← [(im1, im2)]
    diff_x ← range(-D / 2, D / 2 + 1)
    diff_y ← range(-D / 2, D / 2 + 1)
    for each (img1, img2) in gaussian_pyramid:
        prod ← cartesian_product(diff_x, diff_y)
        best_offset ← (2 * best_offset[0], 2 * best_offset[1])
        sampled_im1 ← sobel_filter(img1)
        sampled_im2 ← sobel_filter(img2)
        shifted_im1 ← shift_image(sampled_im1, best_offset)
        for each (a, b) in prod:
            shifted_current_im1 ← shift_image(shifted_im1, (a, b))
            score ← metric(shifted_current_im1, sampled_im2)
            if score > best_score:
                best_score ← score
                best_offset ← (a, b)
        diff_x ← range(best_offset[0] - 1, best_offset[0] + 1)
        diff_y ← range(best_offset[1] - 1, best_offset[1] + 1)
    aligned_im1 ← shift_image(im1, best_offset)
    return aligned_im1, best_offset, best_score
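For readers who want something runnable, below is a generic coarse-to-fine search in the same spirit. It is a sketch under several assumptions and is not a transcription of the pseudocode above: it scores raw intensities with NCC instead of Sobel-filtered images and the project's metric, halves resolution with a 2x2 box average rather than a Gaussian blur, shifts with `np.roll` (which wraps around), and searches each finer level in a small window centered on twice the coarser offset. All function and parameter names are hypothetical.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized images."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def downsample(im):
    """Halve resolution by averaging 2x2 blocks (a stand-in for blur + subsample)."""
    h = im.shape[0] // 2 * 2
    w = im.shape[1] // 2 * 2
    return im[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(im1, im2, min_size=32, coarse_radius=8, refine_radius=2):
    """Return the (deltaX, deltaY) shift of im1 that best matches im2."""
    if min(im1.shape) > min_size:
        # Solve the half-resolution problem first, then double its offset.
        cx, cy = pyramid_align(downsample(im1), downsample(im2),
                               min_size, coarse_radius, refine_radius)
        cx, cy, radius = 2 * cx, 2 * cy, refine_radius
    else:
        # Coarsest level: exhaustive search around zero.
        cx, cy, radius = 0, 0, coarse_radius
    best_offset, best_score = (cx, cy), -np.inf
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ncc(np.roll(im1, (dy, dx), axis=(0, 1)), im2)
            if score > best_score:
                best_score, best_offset = score, (dx, dy)
    return best_offset

# Synthetic check: the target is the source moved 20 rows up and 12 columns right.
rng = np.random.default_rng(0)
im1 = rng.random((128, 128))
im2 = np.roll(im1, (-20, 12), axis=(0, 1))
offset = pyramid_align(im1, im2)
```

In this sketch only the coarsest level pays for a wide window; every finer level tests just 25 candidate shifts, which is where the speedup over a single large window comes from.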

Results

Single Scale Implementation

Cathedral: Red Offset (+4, +12), Green Offset (+4, +4)

Tobolsk: Red Offset (+4, +8), Green Offset (+4, +4)

As seen, the single scale implementation is able to achieve alignment in the majority of the area. However, there is an annoying bit of border left floating at the top, excess from the 15%-on-all-sides crop we performed.

Multi Scale Pyramid Implementation

Emir: Red Offset (+40, +104), Green Offset (+24, +48)

Lady: Red Offset (+8, +120), Green Offset (+8, +56)

Melons: Red Offset (+0, +192), Green Offset (+0, +96)

Sculpture: Red Offset (-32, +128), Green Offset (+0, +32)

Icon: Red Offset (+32, +96), Green Offset (+32, +32)

Church: Red Offset (+0, +64), Green Offset (+0, +32)

Three Generations: Red Offset (+0, +96), Green Offset (+0, +64)

Train: Red Offset (+32, +96), Green Offset (+32, +64)

Self Portrait: Red Offset (+32, +160), Green Offset (+0, +16)

Onion Church: Red Offset (+32, +96), Green Offset (+32, +64)

Monastery: Red Offset (+4, +4), Green Offset (+0, -4)

Harvesters: Red Offset (+0, +128), Green Offset (+32, +64)

We see the best results in the picture with the melons, where the only evidence of misalignment comes from the border at the top. The second best is the statue (Sculpture), which does have a little bit of noticeable color jitter and that ever-so-annoying band of red residue, but is aligned for the most part. However, the issue of the "color band" at the top still remains.

While I attempted to remove this color band, I was unsuccessful in patching it. Professor Efros, after lecture, recommended trying to correlate the three channels in this area, which I was interested in trying but not too sure how to implement.

Custom Image Bonanza

Sirenʹ v Gatchinskom parki︠e︡ (Lilacs in Gatchina park): Red Offset (+32, +64), Green Offset (+32, +32)

Ėtrusskīi︠a︡ vazy. V Ėrmitazhi︠e︡ v SPeterburgi︠e︡ (Etruscan vases. In the Hermitage, St. Petersburg): Red Offset (+0, +96), Green Offset (+0, +0)

Bells and Whistles

Minor improvements with Major Results - Resolution Bashing

Initially, some of the images still turned out misaligned. I accidentally stumbled upon the fact that changing the resolution at which I do the exhaustive displacement search from (128, 128) to (512, 512), a larger resolution, improved results with negligible runtime downsides. However, when I did this, the alignment of the melons image dropped drastically. In order to get the best of both worlds, I ran a grid search over 3 resolutions, (128, 128), (256, 256), and (512, 512), and chose the result with the best average (across green and red) metric value.
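The selection step of that grid search might look roughly like the sketch below. It assumes an alignment routine that takes a coarsest-level resolution and returns `(aligned_image, offset, score)`, as the pseudocode's `align` does; the wrapper `pick_best_resolution` and the stand-in `fake_align` (with made-up scores used purely to exercise the selection logic) are hypothetical.

```python
def pick_best_resolution(align_fn, red, green, blue,
                         resolutions=((128, 128), (256, 256), (512, 512))):
    """Align red and green to blue at each resolution; keep the best mean score."""
    best = None
    for res in resolutions:
        _, red_offset, red_score = align_fn(red, blue, res)
        _, green_offset, green_score = align_fn(green, blue, res)
        avg_score = (red_score + green_score) / 2
        if best is None or avg_score > best["avg_score"]:
            best = {
                "resolution": res,
                "red_offset": red_offset,
                "green_offset": green_offset,
                "avg_score": avg_score,
            }
    return best

def fake_align(im1, im2, res):
    """Stand-in aligner: returns placeholder scores, NOT real measurements."""
    placeholder_scores = {128: 0.61, 256: 0.74, 512: 0.70}
    return im1, (0, 0), placeholder_scores[res[0]]

choice = pick_best_resolution(fake_align, red=None, green=None, blue=None)
```

Averaging across both channels means a resolution that helps one channel but badly hurts the other does not get picked.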

Metric Composition

The final metric ended up being a weighted average of the Normalized Cross Correlation (on the image) and the Structural Similarity Index Measure (SSIM).
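A weighted combination of this kind can be sketched as follows. Two loud assumptions: the weights (0.5 each) are placeholders, since the write-up does not state the ones used, and the SSIM here is a simplified single-window version computed over the whole image, not the usual sliding-window SSIM that image libraries provide. The names `global_ssim` and `combined_metric` are illustrative.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def global_ssim(a, b, data_range=1.0):
    """SSIM formula evaluated once over the whole image (a simplification)."""
    c1 = (0.01 * data_range) ** 2          # standard stabilizing constants
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return float(numerator / denominator)

def combined_metric(a, b, w_ncc=0.5, w_ssim=0.5):
    """Weighted average of NCC and SSIM; the weights are assumed, not the author's."""
    return w_ncc * ncc(a, b) + w_ssim * global_ssim(a, b)

rng = np.random.default_rng(0)
image = rng.random((64, 64))
score_aligned = combined_metric(image, image)
score_misaligned = combined_metric(image, np.roll(image, 7, axis=1))
```

Both terms peak at 1 for identical inputs, so the combined score stays on a comparable scale to NCC alone and can be maximized by the same search loop.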

While I attempted to integrate gradient-based methods, such as computing gradients from an image passed through the Sobel filter, those didn't end up going very far, as they regularly killed alignment. Here's an example of how the emir alignment might look with a weighted average of the three.

Gradient Based Metric Result for Emir

Before (with only NCC)

After (with Sobel gradients)

Notice the color jitter now showing? That was the issue with the Sobel gradients. In retrospect, I noticed that this issue happened specifically with Emir, and for both the SSIM and Sobel edge-gradient methods, so overall neither one really helped or hurt.
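For reference, a Sobel gradient magnitude of the kind discussed here can be computed with plain NumPy as in the sketch below. Assumptions: edge-replicated padding at the border and no normalization of the result; the function name `sobel_magnitude` is illustrative and not the project's `sobel_filter`.

```python
import numpy as np

def sobel_magnitude(im):
    """Gradient magnitude from the 3x3 Sobel kernels, with edge-replicated padding."""
    p = np.pad(im, 1, mode="edge")
    # Horizontal derivative: right column minus left column, rows weighted 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical derivative: bottom row minus top row, columns weighted 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)

# A vertical step edge: dark on the left, bright from column 3 onward.
step = np.zeros((5, 6))
step[:, 3:] = 1.0
edges = sobel_magnitude(step)
edges_brighter = sobel_magnitude(step + 0.3)   # constant brightness offset
```

The response is nonzero only in the two columns straddling the step, and adding a constant brightness leaves it unchanged, which is the appeal of scoring on gradients instead of raw intensities.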

It would be interesting to see if there is a way to precisely determine a criterion for using NCC alone vs. a combination of NCC + Sobel/SSIM (below).

SSIM Based for Emir

Before (with only NCC)

After (with SSIM)

A similar story pops up with the SSIM: minor misalignment. I suspect that a weighted combination of the two would probably be the best, with the unverified hope that SSIM would be able to pick up feature-based alignment that the NCC wouldn't be able to. Then again, we could always grab a large Halloween bag of images and try the Fréchet Inception Distance (FID) ... why not bring a bazooka to a knife fight?